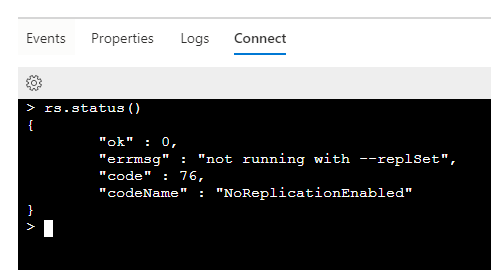

Hi there. I tried following the setup for Kubernetes on Google Cloud, and after a lot of back and forth I have managed to get it to start up, except that it cannot connect to the MongoDB database. The inner error is very confusing, as it says that the state is "Connected":

---> System.TimeoutException: A timeout occured after 30000ms selecting a server using CompositeServerSelector{ Selectors = MongoDB.Driver.MongoClient+AreSessionsSupportedServerSelector, LatencyLimitingServerSelector{ AllowedLatencyRange = 00:00:00.0150000 } }. Client view of cluster state is { ClusterId : "1", ConnectionMode : "ReplicaSet", Type : "ReplicaSet", State : "Connected", Servers : [{ ServerId: "{ ClusterId : 1, EndPoint : "Unspecified/mongo-0.mongo:27017" }", EndPoint: "Unspecified/mongo-0.mongo:27017", State: "Connected", Type: "ReplicaSetGhost", WireVersionRange: "[0, 5]", LastUpdateTimestamp: "2020-03-25T12:16:57.4123533Z" }] }.

at MongoDB.Driver.Core.Clusters.Cluster.ThrowTimeoutException(IServerSelector selector, ClusterDescription description)

at MongoDB.Driver.Core.Clusters.Cluster.WaitForDescriptionChangedHelper.HandleCompletedTask(Task completedTask)

at MongoDB.Driver.Core.Clusters.Cluster.WaitForDescriptionChangedAsync(IServerSelector selector, ClusterDescription description, Task descriptionChangedTask, TimeSpan timeout, CancellationToken cancellationToken)

at MongoDB.Driver.Core.Clusters.Cluster.SelectServerAsync(IServerSelector selector, CancellationToken cancellationToken)

at MongoDB.Driver.MongoClient.AreSessionsSupportedAfterSeverSelctionAsync(CancellationToken cancellationToken)

at MongoDB.Driver.MongoClient.AreSessionsSupportedAsync(CancellationToken cancellationToken)

at MongoDB.Driver.MongoClient.StartImplicitSessionAsync(CancellationToken cancellationToken)

at MongoDB.Driver.MongoCollectionImpl`1.UsingImplicitSessionAsync[TResult](Func`2 funcAsync, CancellationToken cancellationToken)

at Squidex.Infrastructure.MongoDb.MongoRepositoryBase`1.InitializeAsync(CancellationToken ct) in /src/src/Squidex.Infrastructure.MongoDb/MongoDb/MongoRepositoryBase.cs:line 109

I am hoping someone can point me toward a cause for this, so I can start fixing my setup. The YAML for the setup is based almost entirely on the kubernetes subfolder of the docker-squidex Git repo (not using Helm), with values changed only for naming.