I have…

- [X] Read the following guideline: https://docs.squidex.io/01-getting-started/installation/troubleshooting-and-support. I understand that my support request might get deleted if I do not follow the guideline.

I’m submitting a…

- [X] Bug report

Current behavior

I am trying to configure Elasticsearch as the full-text store (`FULLTEXT__TYPE=elastic`).

When the Squidex container starts, the following error appears in the logs and the container stops:

    Unhandled exception. System.InvalidOperationException: Failed with ${"error":{"root_cause":[{"type":"index_not_found_exception","reason":"no such index [squidex]","resource.type":"index_or_alias","resource.id":"squidex","index_uuid":"_na_","index":"squidex"}],"type":"index_not_found_exception","reason":"no such index [squidex]","resource.type":"index_or_alias","resource.id":"squidex","index_uuid":"_na_","index":"squidex"},"status":404}
     ---> Elasticsearch.Net.ElasticsearchClientException: Request failed to execute. Call: Status code 404 from: PUT /squidex/_mapping. ServerError: Type: index_not_found_exception Reason: "no such index [squidex]"
       --- End of inner exception stack trace ---
       at Squidex.Extensions.Text.ElasticSearch.ElasticSearchIndexDefinition.ApplyAsync(IElasticLowLevelClient elastic, String indexName, CancellationToken ct) in /src/extensions/Squidex.Extensions/Text/ElasticSearch/ElasticSearchIndexDefinition.cs:line 126
       at Squidex.Hosting.InitializerHost.StartAsync(CancellationToken cancellationToken)
       at Microsoft.Extensions.Hosting.Internal.Host.StartAsync(CancellationToken cancellationToken)
       at Microsoft.Extensions.Hosting.HostingAbstractionsHostExtensions.RunAsync(IHost host, CancellationToken token)
       at Microsoft.Extensions.Hosting.HostingAbstractionsHostExtensions.RunAsync(IHost host, CancellationToken token)
       at Microsoft.Extensions.Hosting.HostingAbstractionsHostExtensions.Run(IHost host)
       at Squidex.Program.Main(String[] args) in /src/src/Squidex/Program.cs:line 18

Expected behavior

Squidex connects to Elasticsearch, creates the `squidex` index if it does not exist yet, and uses it as the full-text store.

Minimal reproduction of the problem

docker-compose.yml

    services:
      mymongodb:
        image: mongo:5.0.5
        container_name: mymongodb
        networks:
          my-net:
            ipv4_address: 172.21.0.10

      mysquidex:
        image: "squidex/squidex:6.6.0"
        container_name: mysquidex
        environment:
          - URLS__BASEURL=https://${SQUIDEX_DOMAIN}
          - EVENTSTORE__TYPE=MongoDB
          - EVENTSTORE__MONGODB__CONFIGURATION=mongodb://mymongodb
          - STORE__MONGODB__CONFIGURATION=mongodb://mymongodb
          - IDENTITY__ADMINEMAIL=${SQUIDEX_ADMINEMAIL}
          - IDENTITY__ADMINPASSWORD=${SQUIDEX_ADMINPASSWORD}
          - IDENTITY__GOOGLECLIENT=${SQUIDEX_GOOGLECLIENT}
          - IDENTITY__GOOGLESECRET=${SQUIDEX_GOOGLESECRET}
          - IDENTITY__GITHUBCLIENT=${SQUIDEX_GITHUBCLIENT}
          - IDENTITY__GITHUBSECRET=${SQUIDEX_GITHUBSECRET}
          - IDENTITY__MICROSOFTCLIENT=${SQUIDEX_MICROSOFTCLIENT}
          - IDENTITY__MICROSOFTSECRET=${SQUIDEX_MICROSOFTSECRET}
          - ASPNETCORE_URLS=http://+:5000
          - FULLTEXT__TYPE=elastic
          - FULLTEXT__ELASTIC__CONFIGURATION=http://elastic:password@172.21.0.12:9200
        healthcheck:
          test: ["CMD", "curl", "-f", "http://localhost:5000/healthz"]
          start_period: 60s
        depends_on:
          - mymongodb
          - myelasticsearch
        networks:
          my-net:
            ipv4_address: 172.21.0.11

      myelasticsearch:
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.1
        container_name: myelasticsearch
        restart: always
        environment:
          - "discovery.type=single-node"
          - ELASTIC_PASSWORD=password
          - cluster.name=elastic-cluster
        ports:
          - 9200:9200
        networks:
          my-net:
            ipv4_address: 172.21.0.12
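A possible workaround, assuming the only problem is that the `squidex` index does not exist yet when Squidex sends `PUT /squidex/_mapping`: pre-create the index with a one-shot container before Squidex starts. This is an untested sketch, not a confirmed fix. The service name `myelasticsearch-init` and the `curlimages/curl` image are hypothetical additions that are not part of the setup above. `condition: service_completed_successfully` also requires a Compose implementation that follows the Compose Specification (Docker Compose v2). The other keys of `mysquidex` stay as they are; only `depends_on` switches to the long form, because the short list form cannot carry conditions.

```yaml
services:
  myelasticsearch-init:
    # Hypothetical one-shot helper (not part of Squidex): makes sure the
    # "squidex" index exists before Squidex applies its mapping.
    image: curlimages/curl
    entrypoint: ["/bin/sh", "-c"]
    # First curl: PUT creates the index, retrying while Elasticsearch boots.
    # Second curl (only if the PUT fails, e.g. "already exists" on a restart):
    # HEAD succeeds only when the index is present, so the helper still exits 0.
    command:
      - >-
        curl -fsS --retry 30 --retry-connrefused --retry-delay 2
        -u elastic:password -X PUT http://172.21.0.12:9200/squidex
        || curl -fsS -u elastic:password -I http://172.21.0.12:9200/squidex
    depends_on:
      - myelasticsearch
    networks:
      - my-net

  mysquidex:
    # image, environment, healthcheck and networks unchanged from above
    depends_on:
      mymongodb:
        condition: service_started
      myelasticsearch:
        condition: service_started
      myelasticsearch-init:
        condition: service_completed_successfully
```

With this in place, `docker compose up` should run the helper once and start Squidex only after the helper exits with status 0. If Squidex still fails with the index present, the problem lies in the mapping request itself rather than in the missing index.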

Environment

App Name: None

- [X] Self hosted with docker

Version: 6.6.0

Browser:

- Not Applicable

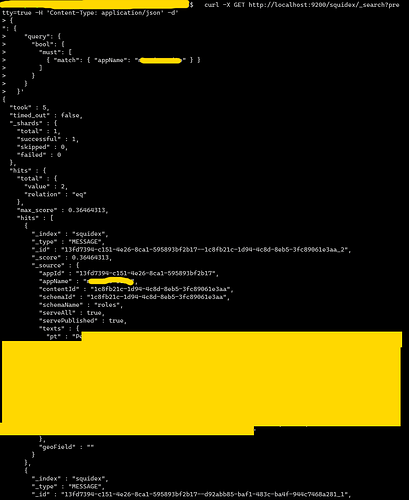

… Especially the “texts” fields.
