I have…

I’m submitting a…

- [ ] Regression (a behavior that stopped working in a new release)

- [x] Bug report

- [ ] Performance issue

- [ ] Documentation issue or request

Current behavior

Mongo is running as an Azure Container Instance. Squidex is running as an Azure App Service on the Basic tier, with “Always On” enabled. When we hit the App Service in the morning, Azure spins up the idle container, and then MongoDB drops all Squidex collections, losing all existing content and users. For example:

```
{"t":{"$date":"2022-07-13T07:14:15.029+00:00"},"s":"I","c":"COMMAND","id":518070,"ctx":"conn9331","msg":"CMD: drop","attr":{"namespace":"SquidexContent.States_Contents_Published3"}}
{"t":{"$date":"2022-07-13T07:14:15.031+00:00"},"s":"I","c":"STORAGE","id":20318,"ctx":"conn9331","msg":"Finishing collection drop","attr":{"namespace":"SquidexContent.States_Contents_Published3","uuid":{"uuid":{"$uuid":"2a2344cf-7fad-48d3-b9c4-b69ccda0a04b"}}}}
```
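To see which client issued the drops, it may help to filter the MongoDB log for drop commands and note the `ctx` value (the connection, `conn9331` in the excerpt). The following is a minimal Python sketch, assuming the log uses MongoDB's structured JSON format with one entry per line, as in the excerpt; `find_drops` is a hypothetical helper, not part of any MongoDB tool.

```python
import json
from typing import Iterable, Iterator, Tuple


def find_drops(lines: Iterable[str]) -> Iterator[Tuple[str, str, str]]:
    """Yield (timestamp, connection, namespace) for every 'CMD: drop' entry."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            # Skip anything that is not a structured JSON log line.
            continue
        if entry.get("c") == "COMMAND" and entry.get("msg") == "CMD: drop":
            yield (
                entry.get("t", {}).get("$date", ""),
                entry.get("ctx", ""),
                entry.get("attr", {}).get("namespace", ""),
            )
```

Feeding it the lines of `mongod`'s log (for example via `open(path)`) lists each dropped namespace together with its connection. That connection name can then be matched against the `NETWORK` entries for the same `ctx`, which may show the remote address of the client that issued the drop.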

Expected behavior

Squidex app service starts and uses existing MongoDB data

Minimal reproduction of the problem

Start MongoDB as an Azure container instance

Start Squidex as an Azure App Service

Leave overnight and then hit the Squidex URL

All Squidex apps are missing and the collections in Mongo are empty

Environment

App Name: magenta-as-uks-ppportalcms

- [x] Self hosted with docker

- [ ] Self hosted with IIS

- [ ] Self hosted with other version

- [ ] Cloud version

Version: [6.7.0.0]

Browser:

- [ ] Chrome (desktop)

- [ ] Chrome (Android)

- [ ] Chrome (iOS)

- [x] Firefox

- [ ] Safari (desktop)

- [ ] Safari (iOS)

- [ ] IE

- [ ] Edge

Others:

How have you deployed Squidex exactly? I think the tutorial creates a volume to persist data.

Hi Sebastian

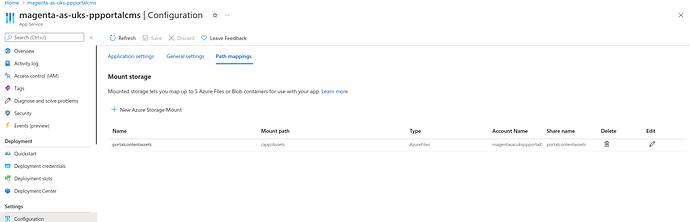

The Squidex App Service has an Azure Storage mount for assets, and the Mongo container instance has an Azure Storage mount for /data/db.

When Squidex is started it logs a list of all settings. Can you provide this? Perhaps as a PM.

PM sent. Thanks for the quick response.

Have you made an upgrade to a newer version of Squidex or do you use the latest tag?

I think this could have happened when you use the latest tag:

- The first start of the service is with an older version.

- For whatever reason the service is restarted and pulls a newer version.

- This newer version has an incompatible database structure, so it tries to migrate automatically and this can mean dropping collections and then rebuilding them.

- The start of the service takes longer (because of the migration), and Azure stops the startup.

Except that there is nothing in the code that would drop a collection.

Hi Sebastian

We build Squidex from the latest tag (and Mongo 5.0.9) and push the images to an Azure container registry. This means that when the App Service starts, it pulls from the container registry, which will only have a newer version of the image if we have run the Docker build pipeline. I can confirm that I did not build any new images overnight when this issue occurred (although so far it has happened every night).

I’ve posted the docker compose here:

Edit: the Squidex build context referenced in the compose file is just:

```dockerfile
FROM squidex/squidex:latest
EXPOSE 5000
```

Cheers,

Ian

Hi Sebastian

We added a routine to our backend that calls the Squidex API every three seconds. It’s not pretty, but it kept the Squidex/Mongo instances alive overnight. I’ll look into adding a similar script to a sidecar in the containers so they stay up without pings from our backend.
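A keep-alive routine like this can be sketched in a few lines. The following is a minimal Python sketch, assuming a plain HTTP GET against the Squidex URL is enough to keep the App Service and the container instance warm. `SQUIDEX_URL`, `ping_once` and `keep_alive` are hypothetical names for illustration; the three-second interval mirrors the routine described above.

```python
import time
import urllib.request
from typing import Callable, Optional

# Hypothetical placeholder; replace with the real Squidex App Service URL.
SQUIDEX_URL = "https://example.azurewebsites.net/"


def ping_once(url: str, timeout: float = 10.0) -> bool:
    """Send one GET request; return True for a 2xx/3xx response, False on any error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, ValueError):
        # URLError, HTTPError and socket timeouts are OSError subclasses;
        # ValueError covers malformed URLs.
        return False


def keep_alive(
    url: str,
    interval: float = 3.0,
    iterations: Optional[int] = None,
    ping: Callable[[str], bool] = ping_once,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Ping `url` every `interval` seconds, forever if `iterations` is None.

    Returns the number of failed pings once `iterations` pings have been sent.
    """
    sent = 0
    failures = 0
    while iterations is None or sent < iterations:
        if not ping(url):
            failures += 1
        sent += 1
        sleep(interval)
    return failures
```

In a sidecar this would run as `keep_alive(SQUIDEX_URL)`. Passing `ping` and `sleep` as parameters keeps the loop testable without network access. This only keeps the instances warm; it does not address why the collections were dropped.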

Still, I do not see why it should drop data or why the migration is running.

Hi Sebastian

I stuck a watchdog sidecar in the container, and I can see that when it started, Squidex complained about a requested version not matching:

```
2022-07-28T15:28:13.409015314Z {
2022-07-28T15:28:13.409368620Z "logLevel": "Error",
2022-07-28T15:28:13.409385620Z "message": "Failed to execute method of grain.",
2022-07-28T15:28:13.409395320Z "timestamp": "2022-07-28T15:28:13Z",
2022-07-28T15:28:13.409399520Z "app": {
2022-07-28T15:28:13.409403520Z "name": "Squidex",
2022-07-28T15:28:13.409407620Z "version": "6.7.0.0",
2022-07-28T15:28:13.409411620Z "sessionId": "e215dffe-b9a1-4048-9935-7c349ecefcb5"
2022-07-28T15:28:13.409427421Z },
2022-07-28T15:28:13.409432521Z "category": "Squidex.Infrastructure.EventSourcing.Grains.EventConsumerGrain",
2022-07-28T15:28:13.409436521Z "exception": {
2022-07-28T15:28:13.409440121Z "type": "Squidex.Infrastructure.States.InconsistentStateException",
2022-07-28T15:28:13.409443821Z "message": "Requested version 1, but found 4.",
2022-07-28T15:28:13.411910464Z "stackTrace": " at Squidex.Infrastructure.MongoDb.MongoExtensions.UpsertVersionedAsync[T,TKey](IMongoCollection\u00601 collection, TKey key, Int64 oldVersion, Int64 newVersion, T document, CancellationToken ct) in /src/src/Squidex.Infrastructure.MongoDb/MongoDb/MongoExtensions.cs:line 135\n at Squidex.Infrastructure.States.MongoSnapshotStoreBase\u00602.WriteAsync(DomainId key, T value, Int64 oldVersion, Int64 newVersion, CancellationToken ct) in /src/src/Squidex.Infrastructure.MongoDb/States/MongoSnapshotStoreBase.cs:line 66\n at Squidex.Infrastructure.States.Persistence\u00601.WriteSnapshotAsync(T state) in /src/src/Squidex.Infrastructure/States/Persistence.cs:line 182\n at Squidex.Infrastructure.EventSourcing.Grains.EventConsumerGrain.DoAndUpdateStateAsync(Func\u00601 action, String position, String caller) in /src/src/Squidex.Infrastructure/EventSourcing/Grains/EventConsumerGrain.cs:line 231\n at Squidex.Infrastructure.EventSourcing.Grains.EventConsumerGrain.ActivateAsync() in /src/src/Squidex.Infrastructure/EventSourcing/Grains/EventConsumerGrain.cs:line 131\n at Squidex.Infrastructure.EventSourcing.Grains.OrleansCodeGenEventConsumerGrainMethodInvoker.Invoke(IAddressable grain, InvokeMethodRequest request) in /src/src/Squidex.Infrastructure/obj/Release/net6.0/Squidex.Infrastructure.orleans.g.cs:line 263\n at Orleans.Runtime.GrainMethodInvoker.Invoke() in /_/src/Orleans.Runtime/Core/GrainMethodInvoker.cs:line 104\n at Squidex.Infrastructure.Orleans.StateFilter.Invoke(IIncomingGrainCallContext context) in /src/src/Squidex.Infrastructure/Orleans/StateFilter.cs:line 48\n at Orleans.Runtime.GrainMethodInvoker.Invoke() in /_/src/Orleans.Runtime/Core/GrainMethodInvoker.cs:line 104\n at Squidex.Infrastructure.Orleans.LocalCacheFilter.Invoke(IIncomingGrainCallContext context) in /src/src/Squidex.Infrastructure/Orleans/LocalCacheFilter.cs:line 35\n at Orleans.Runtime.GrainMethodInvoker.Invoke() in /_/src/Orleans.Runtime/Core/GrainMethodInvoker.cs:line 104\n at Orleans.Runtime.GrainMethodInvoker.Invoke() in /_/src/Orleans.Runtime/Core/GrainMethodInvoker.cs:line 104\n at Squidex.Infrastructure.Orleans.ExceptionWrapperFilter.Invoke(IIncomingGrainCallContext context) in /src/src/Squidex.Infrastructure/Orleans/ExceptionWrapperFilter.cs:line 33\n at Orleans.Runtime.GrainMethodInvoker.Invoke() in /_/src/Orleans.Runtime/Core/GrainMethodInvoker.cs:line 104\n at Squidex.Infrastructure.Orleans.LoggingFilter.Invoke(IIncomingGrainCallContext context) in /src/src/Squidex.Infrastructure/Orleans/LoggingFilter.cs:line 47"
2022-07-28T15:28:13.411952765Z }
2022-07-28T15:28:13.411957165Z }
```

It then iterates through versions (I presume it is doing an internal upgrade?), ending 15 hours later at version 94:

```
2022-07-29T06:50:42.524244957Z "message": "Caught and ignored exception: Squidex.Infrastructure.States.InconsistentStateException with message: Requested version 1, but found 94. thrown from timer callback GrainTimer. TimerCallbackHandler:Squidex.Domain.Apps.Entities.Rules.UsageTracking.UsageTrackerGrain-\u003ESystem.Threading.Tasks.Task \u003COnActivateAsync\u003Eb__5_0(System.Object)",
```

Cheers,

Ian
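The exception type and message suggest an optimistic-concurrency check: the stack trace shows `MongoExtensions.UpsertVersionedAsync` being called with an `oldVersion` and a `newVersion`. The following is a minimal in-memory Python sketch of that general technique, not Squidex's actual code: a write succeeds only if the stored version equals the version the writer expects. `VersionedStore`, `InconsistentStateError` and the use of `-1` for "no document yet" are assumptions made for illustration. It shows how a second, uncoordinated writer holding a stale version would produce exactly this kind of message.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple


class InconsistentStateError(Exception):
    """Raised when the stored version differs from the version the writer expected."""


@dataclass
class VersionedStore:
    # key -> (version, document); an in-memory stand-in for a snapshot collection.
    rows: Dict[str, Tuple[int, Any]] = field(default_factory=dict)

    def upsert_versioned(self, key: str, old_version: int, new_version: int, doc: Any) -> None:
        """Write `doc` only if the stored version equals `old_version`."""
        current = self.rows.get(key)
        # Assumption for this sketch: -1 means "no document stored yet".
        current_version = current[0] if current is not None else -1
        if current_version != old_version:
            raise InconsistentStateError(
                f"Requested version {old_version}, but found {current_version}."
            )
        self.rows[key] = (new_version, doc)


store = VersionedStore()
store.upsert_versioned("grain", -1, 1, {"n": 1})  # first write creates version 1

# Node A keeps advancing the snapshot...
for v in range(1, 4):
    store.upsert_versioned("grain", v, v + 1, {"n": v + 1})

# ...while node B, unaware of A, still believes the snapshot is at version 1.
try:
    store.upsert_versioned("grain", 1, 2, {"n": "stale"})
except InconsistentStateError as error:
    print(error)  # Requested version 1, but found 4.
```

In Orleans, a grain is normally activated on only one silo of a cluster. If two instances cannot see each other, each may activate its own copy and both write the same snapshot, which would produce repeated conflicts like these.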

Do you have multiple instances running? This is an issue when you have multiple nodes that should work in a cluster and communicate with each other, but cannot do so because of their settings. In general, this is very tricky with Azure App Services, which is one of the reasons why I moved from Orleans to a simpler architecture with 7.0: https://squidex.io/post/squidex-7.0-release-candidate-3-released

Nope, but I like the idea of using Azure Service Bus in v7. I spun up a web app on Friday evening with both Mongo and Squidex in a single container, and it survived the weekend, which is promising. It rules out horizontal scaling, as we can’t have multiple Mongo instances, but at least we have the option of vertical scaling.