This is not the flow to reproduce the bug, but the flow of how your content goes from the API to the database and then to the event consumers, where it fails with the error. What I meant: the JSON is serialized and deserialized at least once before it is read again from the database, so I have no idea how it can stop being valid JSON.

I agree with you, it is super weird. I am not sure how data got saved with an unexpected character, but now it keeps failing and these background jobs can’t be started. I just need to somehow recover from this situation. Is there a way I can delete these records? When I call delete it fails, and I have tried update and that fails as well.

You could delete the events in the system. You have to go to the Events2 collection and search for

StreamName: content--[APP_ID]--[CONTENT_ID] and then delete all these events. I would save them somewhere first.
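Saving the events before deleting them could look roughly like the sketch below. It is written in Python against a pymongo-style collection (anything that offers `find` and `delete_many`). The helper name `backup_then_delete`, the backup path, and the JSON-lines format are assumptions for illustration, not part of Squidex. The filter is passed in rather than hard-coded, so check the field name and stream name against an actual document in your Events2 collection first.

```python
import json


def backup_then_delete(collection, query, backup_path):
    """Write every document matching `query` to a JSON-lines file, then delete them.

    `collection` is assumed to behave like a pymongo Collection:
    find(query) returns the matching documents and delete_many(query)
    returns a result object with a `deleted_count` attribute.
    """
    docs = list(collection.find(query))

    # default=str turns BSON-only values (timestamps, ObjectIds, ...) into
    # strings. That is lossy, but enough to inspect the payloads later.
    with open(backup_path, "w", encoding="utf-8") as backup:
        for doc in docs:
            backup.write(json.dumps(doc, default=str) + "\n")

    # Only delete after the backup file has been written and closed.
    result = collection.delete_many(query)
    return len(docs), result.deleted_count
```

With pymongo, `collection` would be the Events2 collection of your Squidex database and `query` the stream filter described above. Comparing the two returned counts is a cheap check that nothing was deleted without being saved.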

Thanks, I have deleted the events and now all background jobs are up and running.

Have you also saved them somewhere, so that I can have a look?

I haven’t saved them, as this was a test environment. I wasn’t aware you wanted that data.

Last sentence in my previous post, but next time …

Sure. I will do that next time if this happens again.

It’s happening again. I am still not sure which record is causing it. I will try to find out more on Monday.

The stream position from the event consumers is also part of the event document.

I have exported the events that were causing this issue. Can you let me know how to share them with you? Since this happened again in another environment, it is most likely related to a bug. It would be great if you could take a look and fix it.

The record showed the same behavior: I was able to open it in Squidex but could not update it anymore. I am worried that if this happens in the production environment, I will not have access to Mongo to delete the records and recover. Since it is happening in lower environments, I have been able to fix it so far.

Send it to me as a PM (just click my avatar).

We’re also seeing the same issue here:

Squidex.Infrastructure.Json.JsonException: After parsing a value an unexpected character was encountered: \. Path 'data.Body.iv', line 1, position 230848.

---> Newtonsoft.Json.JsonReaderException: After parsing a value an unexpected character was encountered: \. Path 'data.Body.iv', line 1, position 230848.

at Newtonsoft.Json.JsonTextReader.ParsePostValue(Boolean ignoreComments)

at Newtonsoft.Json.JsonTextReader.Read()

at Squidex.Domain.Apps.Core.Contents.Json.ContentFieldDataConverter.ReadValue(JsonReader reader, Type objectType, JsonSerializer serializer) in C:\src\src\Squidex.Domain.Apps.Core.Model\Contents\Json\ContentFieldDataConverter.cs:line 35

at Squidex.Infrastructure.Json.Newtonsoft.JsonClassConverter`1.ReadJson(JsonReader reader, Type objectType, Object existingValue, JsonSerializer serializer) in C:\src\src\Squidex.Infrastructure\Json\Newtonsoft\JsonClassConverter.cs:line 26

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.DeserializeConvertable(JsonConverter converter, JsonReader reader, Type objectType, Object existingValue)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.PopulateDictionary(IDictionary dictionary, JsonReader reader, JsonDictionaryContract contract, JsonProperty containerProperty, String id)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateObject(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateValueInternal(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.SetPropertyValue(JsonProperty property, JsonConverter propertyConverter, JsonContainerContract containerContract, JsonProperty containerProperty, JsonReader reader, Object target)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.PopulateObject(Object newObject, JsonReader reader, JsonObjectContract contract, JsonProperty member, String id)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateObject(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.CreateValueInternal(JsonReader reader, Type objectType, JsonContract contract, JsonProperty member, JsonContainerContract containerContract, JsonProperty containerMember, Object existingValue)

at Newtonsoft.Json.Serialization.JsonSerializerInternalReader.Deserialize(JsonReader reader, Type objectType, Boolean checkAdditionalContent)

at Newtonsoft.Json.JsonSerializer.DeserializeInternal(JsonReader reader, Type objectType)

at Squidex.Infrastructure.Json.Newtonsoft.NewtonsoftJsonSerializer.Deserialize[T](String value, Type actualType) in C:\src\src\Squidex.Infrastructure\Json\Newtonsoft\NewtonsoftJsonSerializer.cs:line 67

--- End of inner exception stack trace ---

at Squidex.Infrastructure.Json.Newtonsoft.NewtonsoftJsonSerializer.Deserialize[T](String value, Type actualType) in C:\src\src\Squidex.Infrastructure\Json\Newtonsoft\NewtonsoftJsonSerializer.cs:line 67

at Squidex.Infrastructure.EventSourcing.DefaultEventDataFormatter.Parse(StoredEvent storedEvent) in C:\src\src\Squidex.Infrastructure\EventSourcing\DefaultEventDataFormatter.cs:line 41

at Squidex.Infrastructure.EventSourcing.DefaultEventDataFormatter.ParseIfKnown(StoredEvent storedEvent) in C:\src\src\Squidex.Infrastructure\EventSourcing\DefaultEventDataFormatter.cs:line 37

at Squidex.Infrastructure.EventSourcing.Grains.BatchSubscriber.<>c__DisplayClass11_0.<<-ctor>b__1>d.MoveNext() in C:\src\src\Squidex.Infrastructure\EventSourcing\Grains\BatchSubscriber.cs:line 78

The last Event in the Events2 collection is

{ _id: 'ffffb38d-46fb-448d-8a9a-f4d88e874c83',

Timestamp: Timestamp({ t: 1650962535, i: 104 }),

Events:

[ { Type: 'ContentDeletedEvent',

Payload: '{"contentId":"c57df302-9a54-4571-a956-63d6d583733b","schemaId":"a357329c-0f3a-45eb-aefd-b48163f8db32,topics","appId":"971d8888-0bbc-4114-9747-5cea08111abf,corecms","actor":"client:core-cms-gb-admin-client","fromRule":false}',

Metadata:

{ EventId: 'e20800ff-2b37-445d-b1fb-c711fed94eb3',

Timestamp: '2022-04-26T08:42:15Z',

AggregateId: '971d8888-0bbc-4114-9747-5cea08111abf--c57df302-9a54-4571-a956-63d6d583733b',

CommitId: 'ffffb38d-46fb-448d-8a9a-f4d88e874c83' } } ],

EventStreamOffset: -1,

EventsCount: 1,

EventStream: 'content-971d8888-0bbc-4114-9747-5cea08111abf--c57df302-9a54-4571-a956-63d6d583733b--deleted' }

If you go to the events collection you see the position, which is basically the timestamp. You can query events greater than this timestamp, and the first event returned should be the one causing the problem. But I cannot reproduce it and need a backup or something similar to test and debug it.
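To confirm which of the returned events is the broken one, each `Payload` string can be run through a JSON parser. The following Python sketch assumes the commits have been loaded as dictionaries with the `_id`, `Events` and `Payload` fields shown in the document above; the function name `find_invalid_payloads` is made up for this example. Python's `json` module is not Newtonsoft.Json, so the message and position it reports may differ from the ones in the stack trace.

```python
import json


def find_invalid_payloads(commits, context=20):
    """Return one entry per event whose Payload is not valid JSON.

    Each entry is (commit_id, event_index, message, position, snippet),
    where snippet is the payload text around the failing position.
    """
    problems = []
    for commit in commits:
        for index, event in enumerate(commit.get("Events", [])):
            payload = event.get("Payload", "")
            try:
                json.loads(payload)
            except json.JSONDecodeError as error:
                start = max(error.pos - context, 0)
                snippet = payload[start:error.pos + context]
                problems.append(
                    (commit.get("_id"), index, error.msg, error.pos, snippet)
                )
    return problems
```

The snippet around the failing position is usually enough to see which character tripped the parser without dumping a payload that is hundreds of kilobytes long.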

Hi Sebastian!

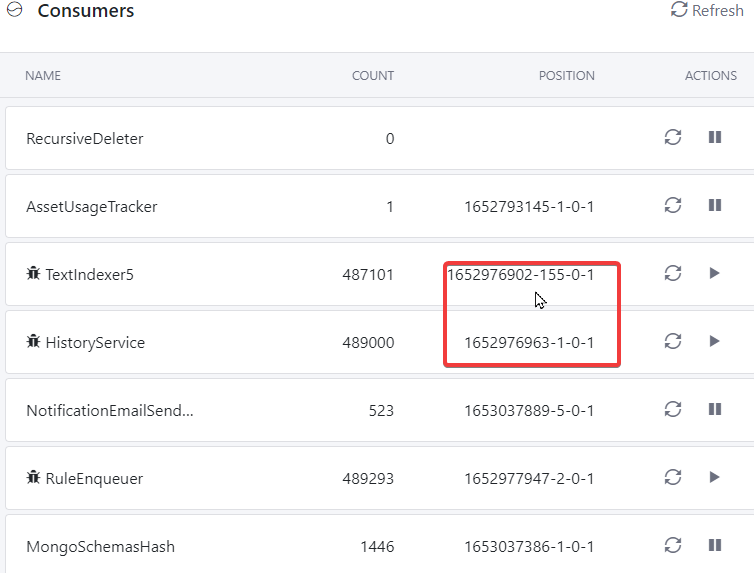

Are you referring to these positions?

If so, should I be able to query the Events2 collection by filtering for the timestamp and then taking the next document to find the one that is causing the issue?

With regard to getting a backup, I’m currently discussing this with the team internally to see the best way we can do this.

Yes, exactly. It should work like this.

Thanks, I’m currently trying to filter to obtain the information.

The position shows as 1652977027-2-1-2

What are the values in dashes after the UNIX timestamp?

I’m trying to query it by using

db.Events2.find({Timestamp: { $gte: Timestamp({ t: 1652977027, i: 2})}}).limit(2)

Events are grouped into commits. So 1-2 means the first event in a commit of 2 events.

For me it works like this: { "Timestamp" : { $gte : Timestamp(1653045275, 1) } } but your syntax might work as well.
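As a small illustration, the position string can be split into its parts and turned into the shell query used above. This is a Python sketch under assumptions: the first two numbers are taken to be the `t` and `i` of the BSON timestamp (as in the query earlier in this thread), the last two are the commit part described above, and the names `StreamPosition`, `parse_position` and `shell_query` are invented for the example.

```python
from typing import NamedTuple


class StreamPosition(NamedTuple):
    seconds: int       # UNIX timestamp, used as `t`
    increment: int     # assumed to be the timestamp increment `i`
    commit_index: int  # per the thread, "1-2" is the first event ...
    commit_size: int   # ... in a commit of 2 events


def parse_position(position: str) -> StreamPosition:
    """Split a position such as '1652977027-2-1-2' into its four numbers."""
    parts = position.split("-")
    if len(parts) != 4:
        raise ValueError(f"expected 4 dash-separated numbers, got {position!r}")
    return StreamPosition(*(int(part) for part in parts))


def shell_query(position: str, limit: int = 2) -> str:
    """Build the mongo shell query that lists commits from this position on."""
    pos = parse_position(position)
    return (
        "db.Events2.find({ Timestamp: { $gte: "
        f"Timestamp({{ t: {pos.seconds}, i: {pos.increment} }}) "
        f"}} }}).limit({limit})"
    )
```

Calling `shell_query("1652977027-2-1-2")` produces the same query as the one posted above, which can then be pasted into the mongo shell.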

Can you both share your mongo version and setup?